Overview – Data Driven Testing

Data Driven Testing (DDT) is a popular testing approach, especially for functional testing. The same business requirement or use case can produce different results when it is exercised with different data. One of the most common uses is form entry. The basic aim of this approach is to design one test case and run it with different data sets.

To use data-driven testing in this scenario, you can record a single automated test and supply the values to be used in the various fields. The test is then run once for each set of values.
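The idea of one test case driven by many data sets can be sketched in a few lines of Python. This is only an illustration: `submit_form`, the accepted device types, and the sample rows are hypothetical stand-ins, not part of the application tested later in this post.

```python
# Hypothetical validator standing in for the application under test.
def submit_form(name, device_type):
    """Return True when the form data is accepted."""
    return bool(name.strip()) and device_type in {"sensor", "gateway"}


# One test case, many data sets: (name, type, expected result).
DATA_SETS = [
    ("thermostat-1", "sensor", True),
    ("", "sensor", False),
    ("hub-1", "unknown", False),
]


def run_data_driven_test(data_sets):
    """Run the same check against every data set and record whether it matched."""
    results = []
    for name, device_type, expected in data_sets:
        actual = submit_form(name, device_type)
        results.append((name, actual == expected))
    return results


results = run_data_driven_test(DATA_SETS)
for name, passed in results:
    print(f"{name!r}: {'PASS' if passed else 'FAIL'}")
```

The test logic is written once; adding a new scenario only means adding a row to the data.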

Introduction

Although Data Driven Testing is generally used for test automation, it can also be implemented with performance and load testing tools like Apache JMeter. In some cases, using JMeter can be much faster and more effective for Data Driven Testing because we can run dozens of tests very quickly without opening a browser and without consuming many resources.

The important point when performing DDT is to use a “Response Assertion” to check whether each data row actually passed or failed.

There are different ways to provide the data for Data Driven Testing using JMeter:

- CSV file

- Excel file

- XML

- Database

In this example we will use a CSV file for Data Driven Testing. We might write a future blog post about Data Driven Testing using an Excel file, which is a bit more complicated than using CSV files.

Example – IOT Application

In our example we’ll be using an IOT (Internet of Things) application https://demo.thingsboard.io/ and adding new devices from our list. We will get the device information from our CSV data file.

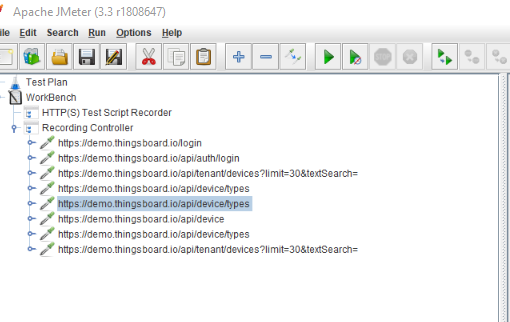

First, we record the device-adding process of our IOT application using JMeter’s “HTTP Test Script Recorder”. You can exclude the types of content you do not want to request (e.g. *.jpg, *.png, *.js, etc.) by selecting the “Add Suggested Excludes” option in the Test Script Recorder.

After the recording is completed, our script looks like this:

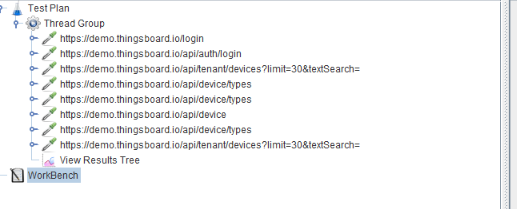

I renamed the recorded request to something meaningful, moved it from the Recording Controller to the Thread Group, and deleted the Recording Controller.

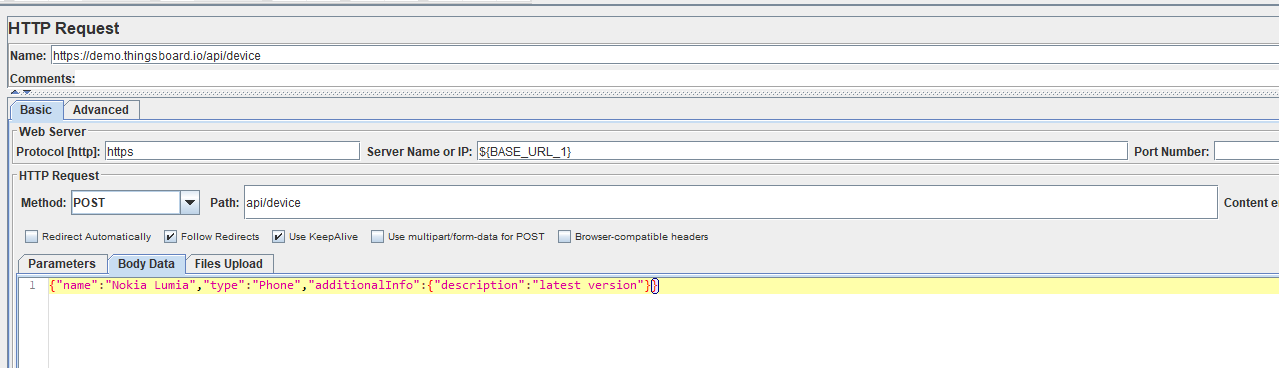

The following request contains the “name” and “type” fields. We will implement Data Driven Testing for these two variables.

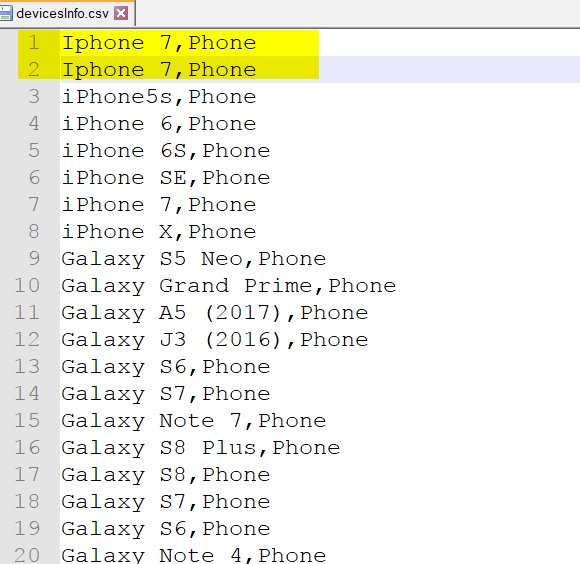

Each line of the CSV file has a device name followed by a comma and the device type. You can add more columns as required. In this example we are using two data variables.

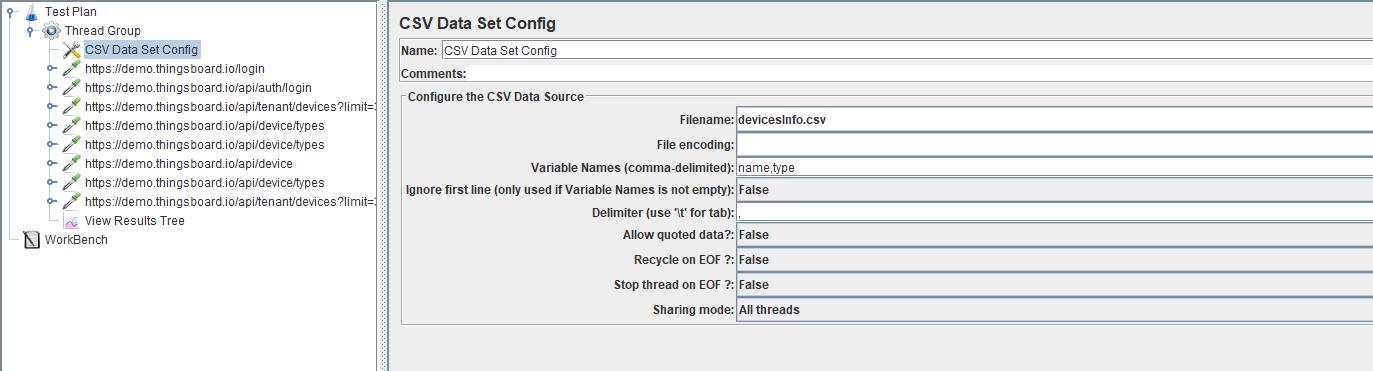
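To make the file layout concrete, here is a minimal Python sketch that reads rows of the form `name,type`. The sample device names and types are hypothetical; the actual values are the ones shown in the screenshot above.

```python
import csv
import io

# Hypothetical sample content; real device names and types will differ.
SAMPLE_CSV = "Thermostat A,sensor\nGateway B,gateway\n"


def read_devices(csv_text):
    """Parse 'name,type' lines into a list of dictionaries."""
    reader = csv.reader(io.StringIO(csv_text))
    return [
        {"name": row[0].strip(), "type": row[1].strip()}
        for row in reader
        if row  # skip blank lines
    ]


devices = read_devices(SAMPLE_CSV)
for device in devices:
    print(device["name"], "->", device["type"])
```

Each row becomes one set of values for the test, with the first column mapped to `name` and the second to `type`.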

In order for the JMeter script to use our CSV file, which has all of the device names and types, we should add a “CSV Data Set Config” config element. To do this, right-click on Thread Group -> Add -> Config Element and select CSV Data Set Config. After adding the config element, we should enter the correct path to our CSV file.

The Variable Names field lists, comma-delimited, the variable names that map to the columns of our CSV file (here, name and type).

Now we need to change the name and type fields in the request to dynamic values. JMeter variable syntax looks like this: ${variablename}.

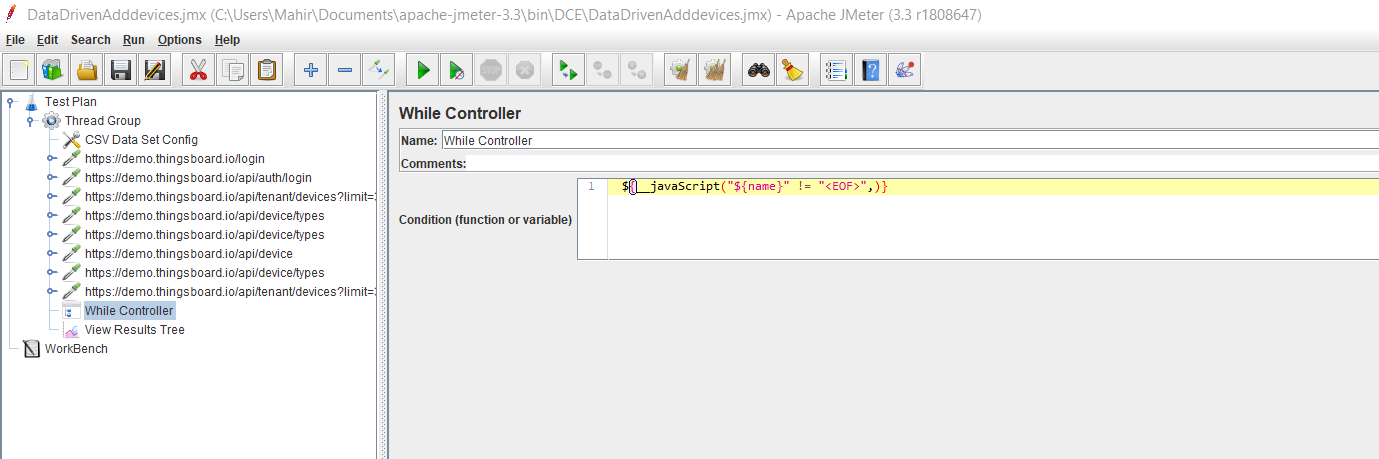
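The substitution step can be imitated in Python with `string.Template`, which happens to use the same `${...}` placeholder syntax. This is a sketch only: the JSON body below is a hypothetical, simplified version of the recorded request, and values are inserted as-is without JSON escaping.

```python
import json
from string import Template

# Hypothetical request body; the recorded request may contain more fields.
BODY_TEMPLATE = Template('{"name": "${name}", "type": "${type}"}')


def render_body(row):
    """Fill the ${name} and ${type} placeholders from one CSV row."""
    return BODY_TEMPLATE.substitute(row)


body = render_body({"name": "Thermostat A", "type": "sensor"})
print(body)
```

For every row read from the CSV file, the placeholders are replaced with that row's values before the request is sent.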

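We will add a While Controller to the Thread Group so that the rows of the CSV file are processed one after another. The loop needs to run once per row, so instead of a fixed count we give the controller a condition that stays true until the name variable defined above becomes <EOF>: ${__javaScript("${name}" != "<EOF>",)}. With “Recycle on EOF” and “Stop thread on EOF” both set to False, the CSV Data Set Config sets its variables to <EOF> once the file is exhausted, which ends the loop.

We then move our CSV Data Set Config and the Device Add HTTP Request into the While Controller.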
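The looping logic can be sketched in Python as follows. The `CsvDataSet` class is a hypothetical stand-in for the data source, and this sketch reads a row before checking the condition, which is a simplification; JMeter's own evaluation order may differ.

```python
EOF_MARKER = "<EOF>"


class CsvDataSet:
    """Tiny stand-in for a data source that yields rows, then an EOF marker."""

    def __init__(self, rows):
        self._rows = iter(rows)

    def next_row(self):
        # Once the rows run out, every variable is set to the EOF marker.
        return next(self._rows, {"name": EOF_MARKER, "type": EOF_MARKER})


def run_until_eof(data_set):
    """Process rows while the 'name' variable is not the EOF marker."""
    processed = []
    row = data_set.next_row()
    while row["name"] != EOF_MARKER:
        processed.append(row["name"])
        row = data_set.next_row()
    return processed


rows = [
    {"name": "Thermostat A", "type": "sensor"},
    {"name": "Gateway B", "type": "gateway"},
]
print(run_until_eof(CsvDataSet(rows)))
```

The loop never needs to know how many rows the file has; it simply stops when the marker appears.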

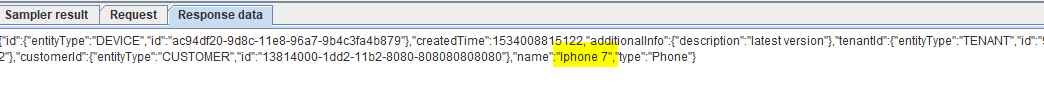

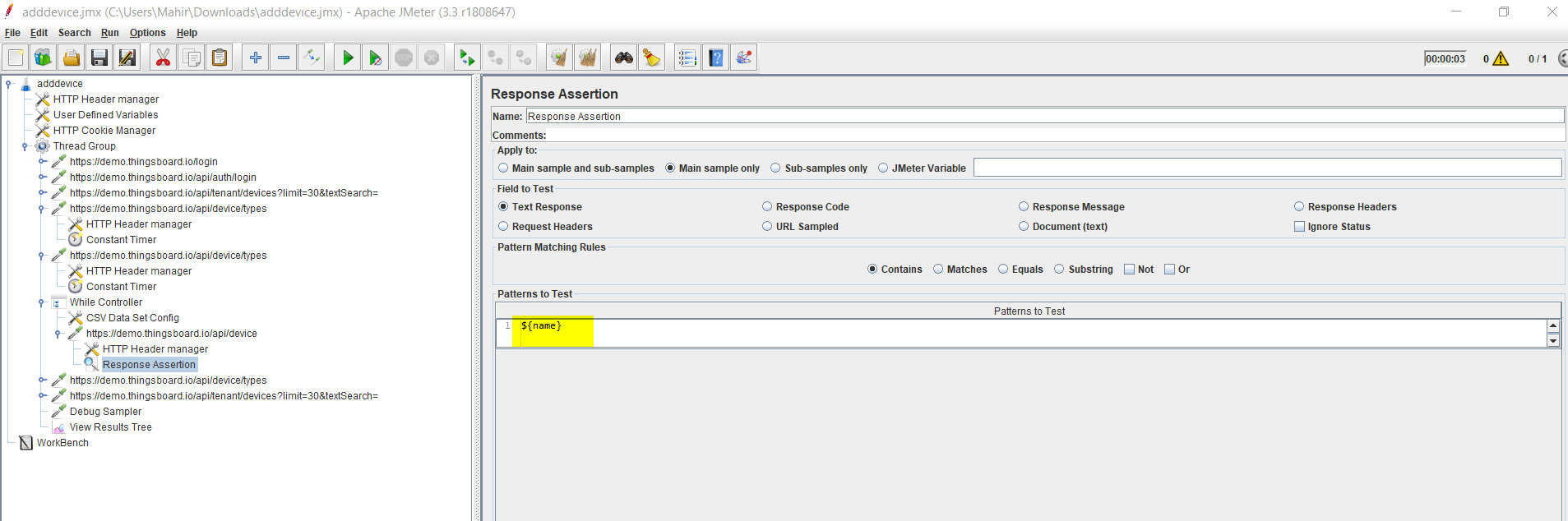

As we said above, when performing Data Driven Testing it is essential to use a Response Assertion to determine whether each data row passes or fails, so we will add a Response Assertion to our HTTP Request. Before configuring it, we should know how the system responds on success and on failure. If the device is added successfully, the response contains the name of the added device. If we try to add a device name that already exists, the system returns an error.

The response looks like this when successfully adding a device to the system:

When we try to add a device name that already exists in the system, the response looks like:

After learning the system behavior, we can configure our Response Assertion.

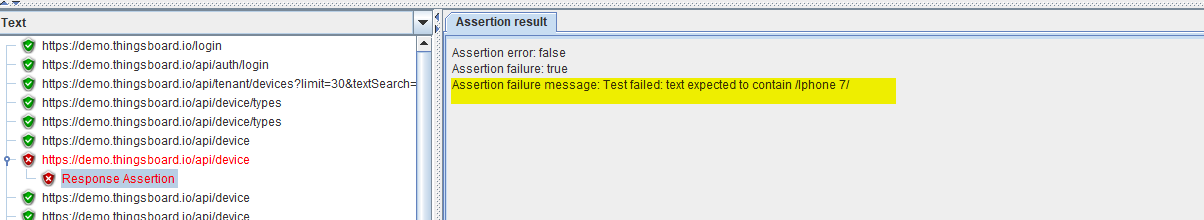
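In Python terms, a check of this kind boils down to testing whether the expected text appears in the response. The two response bodies below are hypothetical examples, not the actual responses of the demo application.

```python
def response_contains(response_body, pattern):
    """Pass only if the expected text appears somewhere in the response."""
    return pattern in response_body


# Hypothetical response bodies for illustration only.
success_body = '{"name": "Thermostat A", "type": "sensor"}'
error_body = '{"status": 400, "message": "duplicate device"}'

print(response_contains(success_body, "Thermostat A"))
print(response_contains(error_body, "Thermostat A"))
```

Because the success response echoes the device name and the error response does not, checking for the name is enough to separate the two cases in this sketch.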

In order to see how the Response Assertion behaves in failure situations, I intentionally added duplicate device names to our CSV data file.

Before running the script, the JMeter project structure looks like this:

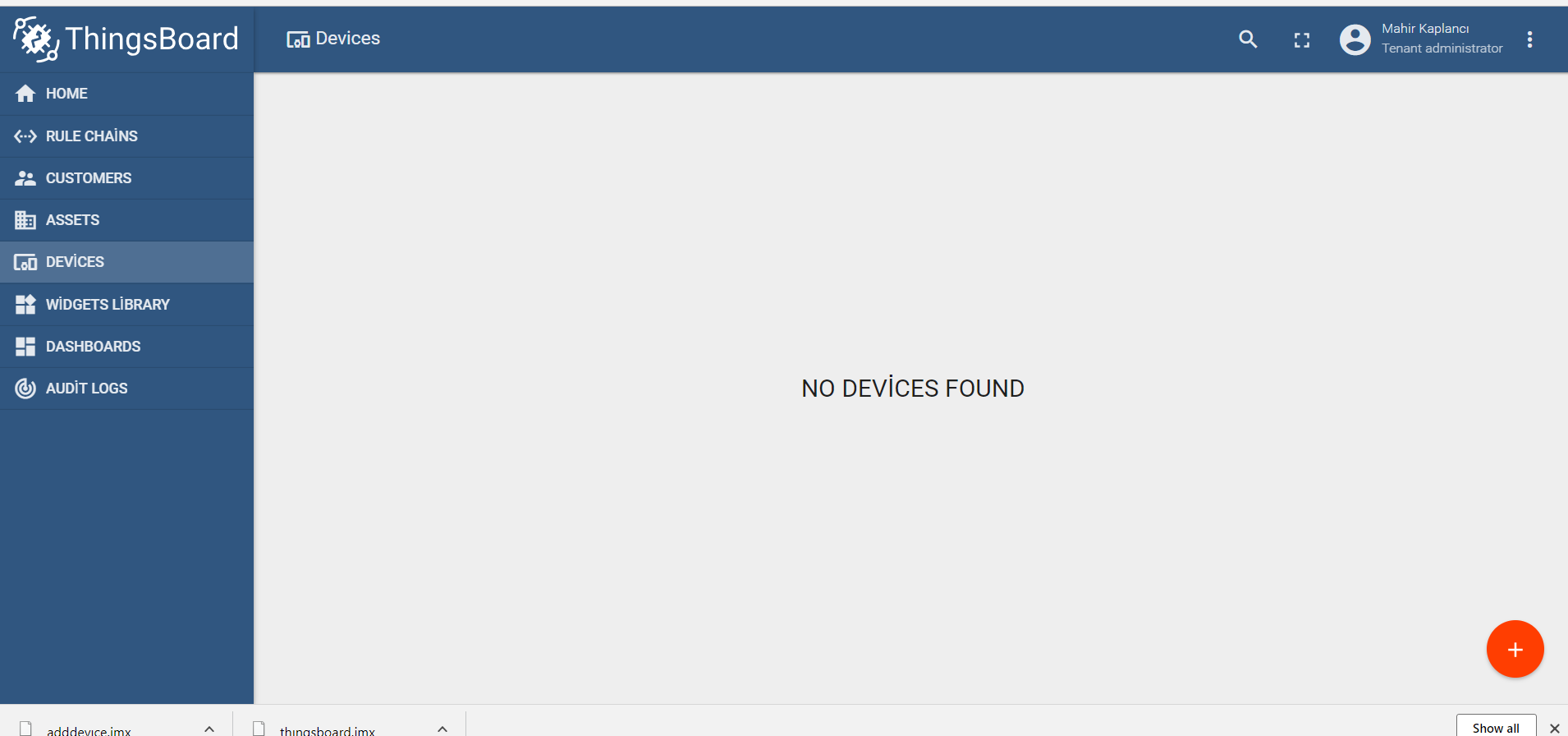

And our application has no devices.

When we run our script, some requests fail because their responses do not satisfy the Response Assertion:

After the script completes, the devices have been added to the system.
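The whole run, including the duplicate rows that fail, can be simulated with a small in-memory stand-in. Everything here is hypothetical: `add_device` only imitates a service that rejects duplicate names, and the device names and error message are invented for the sketch.

```python
import json


def add_device(registry, name):
    """Hypothetical service: echo the name on success, return an error on duplicates."""
    if name in registry:
        return json.dumps({"status": 400, "message": "duplicate device"})
    registry.add(name)
    return json.dumps({"name": name})


def run_rows(names):
    """Send every row and record whether the response contains the device name."""
    registry = set()
    outcomes = []
    for name in names:
        body = add_device(registry, name)
        outcomes.append((name, name in body))
    return outcomes


outcomes = run_rows(["Thermostat A", "Gateway B", "Thermostat A"])
failed = [name for name, passed in outcomes if not passed]
print(f"{len(outcomes) - len(failed)} passed, {len(failed)} failed: {failed}")
```

The first occurrence of each name is added, while the repeated name is rejected and counted as a failed row.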

Scaling our Data Driven Testing

After checking that our script works well, we can run it on AWS (Amazon Web Services), as you would any JMeter script, to perform a load test using RedLine13. Apache JMeter is one of the most popular tools for load testing, and scaling out our JMeter test plan on the cloud with RedLine13 is easy. This guide and video walk you through running your first JMeter test on RedLine13.

That’s it.